I’ve had a couple discussions recently around nondeterminism in LLMs. Measuring statistical bounds is really the only way to go with LLMs. But it’s also entirely true that thinking statistically is (a) much more expensive (both up-front in talent and dataset design, and in perpetuity to run the estimator), and (b) unintuitive-bordering-on-uncomfortable for folks who expect determinism from their machines. So I’m not surprised to have the “but why isn’t it deterministic? can we make it deterministic?” discussion over and over again.

Here is what I know about sources of non-determinism in LLMs:

- Human perception. Humans aren’t great at actually perceiving truly identical inputs. I’ve found myself and others to pretty often think two inputs are identical, when they aren’t identical at all. In an LLM, any deviation in the input sequence may lead to different completions. Even slight differences in punctuation, synonym choice, or minimal inserted context can affect the LLM output. Automated diffs help uncover perception mistakes.

- Model versioning. Developers like versioned APIs. Whenever we hit the same API with the same fixed parameters, we expect the same behavior from the remote system. At time of writing, though, Google’s LLMs don’t support version pinning. For instance, last Tuesday I asked Google’s Bison to generate some “be mean” data, and it was pretty willing to oblige (it wouldn’t be mean to all the social groups I tried, but it spat out stereotypes for a lot of groups). On Wednesday, it politely refused. Ethically, I prefer Wednesday’s model – but entire product capabilities disappearing literally overnight, while the API and user code are exactly the same? That’s massive, unrecoverable non-determinism.

- Temperature. “Temperature” is a physics metaphor: the higher the temperature, the more “random molecule bouncing around” you get. In an LLM world, temperature affects how random each next token is. Each selected token changes the most likely tokens for the rest of the completion. You can set the temperature to 0 to greedily pick the highest-probability token at each step, but `temperature=0` still doesn’t guarantee deterministic outputs. You get non-determinism if there are multiple options with the same probability representation (rare, but it occurs). You also get non-determinism if the randomness is introduced upstream of the greedy token selection, such that a different next token becomes most probable, as discussed below.

- Floating point math. To compress the infinite space of real numbers into the finite space of computer memory, we necessarily lose precision. Floating point numbers can represent both very large and very small numbers – but the floating point numbers are not evenly spaced from each other. We always approximate into the closest representable floating point number, so some number `i` might have a different amount of discretization error than another number `j`. The discretization errors can build up when we do addition. As a result, addition in floating point isn’t guaranteed to be associative. In other words, `(a+b)+c` might produce a different result than `a+(b+c)` in floating point math. For parallelized operations (and LLMs are massively parallelized), we usually allow addition to occur in an arbitrary order. But the order can affect the final sum. And the final sum affects the most likely token, which affects the rest of the generated string.

- Peers in batch. Because they are Mixture of Experts (MoE) models, the GPT models (and maybe others) appear to produce outputs that are deterministic only at the batch level. MoE models contain many “experts”, which are often distributed across many machines. When processing each input token, rather than sending it through a complete and expensive dense network, we instead identify the “right” experts to handle it. The best way to identify the “right” experts is an area of active research. In current MoE models, each token activates only a sparse subset of the experts, which makes it possible to get the quality benefits of significantly larger models without paying the full computational cost at inference. The MoE approach introduces non-determinism because the contents of each batch must be mapped to experts and returned as a batch, and there is a limit on how much data each expert can simultaneously handle. In other words, if your batch has many other inputs that compete with you for the experts you need, you might get a different set of experts than you would in a different batch. This competition for experts can lead to different predicted tokens depending on how your messages are batched for inference, so the effect depends on who else is using the LLM APIs at the same time as you.
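To make the floating point and temperature points concrete, here is a minimal Python sketch. The three `TERMS`, the two-token vocabulary, and the helper names are all made up for illustration; a real model sums thousands of terms per logit and the effect is far subtler than in this exaggerated example. It only shows that the same numbers, added in a different order, can flip a greedy choice.

```python
# Floating point addition is not associative: grouping changes the result.
a, b, c = 0.1, 0.2, 0.3
grouped_left = (a + b) + c    # 0.6000000000000001
grouped_right = a + (b + c)   # 0.6

# Hypothetical partial sums that together make up the logit of token "A".
# The magnitudes are exaggerated so the rounding is easy to see.
TERMS = [1e16, 1.0, -1e16]


def sum_in_order(terms, order):
    """Add the terms one at a time, in the given index order."""
    total = 0.0
    for index in order:
        total += terms[index]
    return total


def greedy_token(order):
    """Pick the highest-logit token, as temperature=0 decoding would."""
    logits = {
        "A": sum_in_order(TERMS, order),  # depends on the summation order
        "B": 0.5,                         # fixed competitor, for illustration
    }
    return max(logits, key=logits.get)


print(grouped_left == grouped_right)  # False
print(greedy_token((0, 1, 2)))        # B: the 1.0 is absorbed by 1e16, so A's logit is 0.0
print(greedy_token((0, 2, 1)))        # A: the large terms cancel first, so A's logit is 1.0
```

Nothing in this sketch is random, yet the selected token depends on an ordering that a parallel reduction is usually free to choose.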

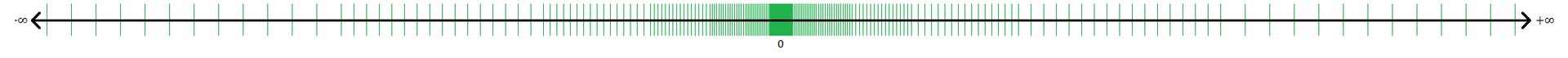
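Going back to the human perception point: before blaming the model, it is worth checking mechanically that two prompts really are identical. Here is one way to sketch that with Python's standard `difflib` and `unicodedata` modules. The function name `describe_differences` and the two example prompts are my own, chosen for illustration.

```python
import difflib
import unicodedata


def describe_differences(a: str, b: str) -> list[str]:
    """Return one readable line per span where the two strings differ."""
    report = []
    matcher = difflib.SequenceMatcher(None, a, b, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue
        # Spell out each character by its Unicode name, since look-alike
        # characters are exactly what the eye misses.
        left = [unicodedata.name(ch, repr(ch)) for ch in a[i1:i2]]
        right = [unicodedata.name(ch, repr(ch)) for ch in b[j1:j2]]
        report.append(f"{tag} a[{i1}:{i2}] -> b[{j1}:{j2}]: {left} -> {right}")
    return report


# These two prompts look the same in most fonts, but one uses a straight
# apostrophe and the other a curly one (U+2019).
prompt_a = "Summarize the user's message."
prompt_b = "Summarize the user\u2019s message."

for line in describe_differences(prompt_a, prompt_b):
    print(line)
```

An empty report means the inputs really are the same string, and any remaining variation comes from somewhere else on the list above.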

In an LLM, each token is conditioned on all the tokens that preceded it. As a result, once one token diverges, the remainder of the sequence will diverge even more. It’s all back to chaos theory: “when a butterfly flaps its wings in Brazil…”. So yes, if we want to be certain in this new world, we will need experimental statistics. How certain do you need to be?
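As a rough sketch of what “experimental statistics” can look like here: run the same prompt many times, count how often the completion matches the one you expect, and put a confidence interval around that rate. The code below uses the standard Wilson score interval. The `generate` callable is a stand-in for whatever LLM client you use, and `make_fake_generate` is a made-up stub so the example runs without any API.

```python
import itertools
import math
from typing import Callable


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z=1.96 is roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z * z / trials
    center = (p + z * z / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def count_matches(generate: Callable[[str], str], prompt: str, expected: str, n: int) -> int:
    """Call generate(prompt) n times and count exact matches with expected."""
    return sum(1 for _ in range(n) if generate(prompt) == expected)


def make_fake_generate() -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: answers "4" nine times out of ten."""
    outputs = itertools.cycle(["4"] * 9 + ["four"])
    return lambda prompt: next(outputs)


matches = count_matches(make_fake_generate(), "What is 2 + 2?", "4", n=100)
low, high = wilson_interval(matches, 100)
print(f"{matches}/100 matched; 95% interval: {low:.3f} to {high:.3f}")
```

One thing this makes visible: even 100 matches out of 100 only bounds the true match rate at roughly 96% or better, so a larger sample is the price of a tighter bound.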